An experience-driven perspective on today’s toughest Agentic AI challenge, and the key factors that should guide your decision.

Over the last two years, the conversation around Agentic AI in healthcare has shifted completely. Today, no one questions its relevance; every healthcare organization already has it on its roadmap. The real question now is how to implement it effectively: should you build your own system or rely on specialized AI platforms? There is no single answer.

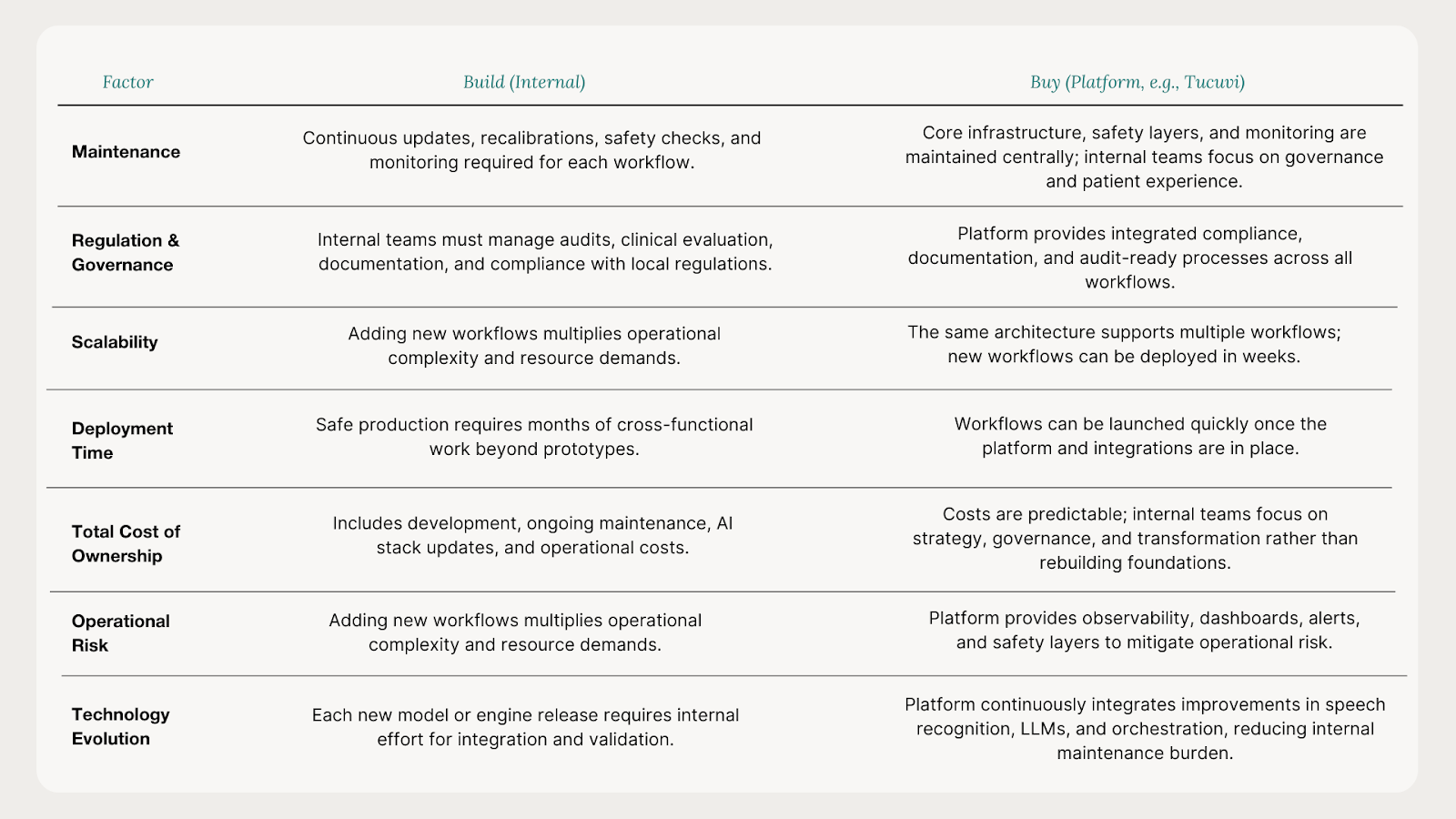

With years of experience deploying clinical and administrative voice workflows in complex healthcare environments, Tucuvi has seen that the answer is rarely a simple build-or-buy decision. The right choice depends on several factors: where the organization wants to differentiate, the complexity of the workflow, regional regulatory requirements, and the team’s capacity to maintain a fast-evolving AI system. It also requires understanding that Agentic AI is not magic, even if it may feel transformative at first glance.

Most healthcare organizations start in a similar way: a quick prototype is built, often performing surprisingly well. It can answer questions, book fake appointments, and speak naturally enough to suggest that scaling to a production-ready system might be straightforward. This early phase is exciting, but it is also when expectations begin to diverge from the realities of operating safe, stable, clinical-grade voice AI systems in real-world healthcare settings.

This article offers an overview of what healthcare teams encounter when building internal clinical voice AI for phone workflows, including scheduling, care management, and remote patient monitoring. The insights are drawn from years of hands-on experience with healthcare organizations ranging from individual clinics to large health systems with networks of more than 50 hospitals, as well as government institutions.

Differentiating building from prototyping:

The Challenges of Scaling Agentic AI

Most internal builds start with enthusiasm. A quick prototype can genuinely feel transformative. Teams are proud, leadership is impressed, and the organization begins imagining what could be possible if the prototype were expanded into a full clinical workflow. With enough time and talent, that expansion may seem straightforward.

The shift happens when the team moves from the controlled environment of a demo to a real workflow. At this stage, the challenges multiply. A clinical voice AI agent must reliably identify patients, ask structured questions, interpret unstructured answers, detect symptoms, classify risk, manage appointments and FAQs, decide when to escalate, document encounters, and handle unexpected behaviors. None of this is deterministic, and standard metrics like word error rate (WER) stop being enough. The key questions become whether the system can reliably complete the task end-to-end, how often it fails, how observable its failures are, and what the clinical consequences could be if it fails silently.
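
To make the WER point concrete, here is a minimal sketch that computes word error rate (substitutions, deletions and insertions divided by the number of reference words) using a standard word-level edit distance. The patient utterance and the transcript are invented for illustration: a single dropped word yields a WER of about 8%, which looks excellent on a dashboard, yet it reverses the clinical meaning.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # dp[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution or match
            )
    return dp[len(ref)][len(hyp)] / len(ref)


# Hypothetical example: the ASR drops the word "no".
reference = "since yesterday i have had no chest pain after taking my tablets"
hypothesis = "since yesterday i have had chest pain after taking my tablets"
print(f"WER: {word_error_rate(reference, hypothesis):.1%}")  # WER: 8.3%
```

An aggregate WER treats "no" like any other word, which is why task-level and clinically weighted checks matter more than the headline number.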

As complexity grows, engineers are no longer the only people involved. Clinical leaders, quality and risk teams, data protection, security, operations and call centre management all join. Meeting cadences increase. Documentation grows. The pathway design is reworked, and often narrowed, as exceptions appear. What looked like a two-week extension becomes a multi-month cross-functional project.

Eventually, a pilot arrives and the system seems ready enough to test. Then real traffic starts flowing and new challenges appear. Latency is higher than expected when calls spike. Speech recognition behaves differently with real patients than with internal volunteers. Some calls loop. Some calls drop. Some answers are misinterpreted because real-world phrasing does not match the test set. The EHR receives thousands of requests it should never have received. To address these issues, engineering needs to build observability: new dashboards, new alerts and new metrics. Stabilizing the system can take months.

Meanwhile, the technology landscape continues evolving. Speech models get updated, telephony-optimized models appear, and major LLM updates from providers like OpenAI, Anthropic, Meta, or Mistral change performance characteristics. Systems that just passed validation can already be behind.

Upgrades introduce their own side effects. Accuracy improves in one language and regresses in another. Latency drops, but guardrails behave differently. Costs fluctuate. Small differences in transcription can trigger safety filters. Errors compound across steps, so a system that looks strong in isolation performs far worse across a real multi-step conversation. And none of this yet covers what happens when the system gets something clinically important wrong.
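
The compounding effect can be illustrated with simple arithmetic. The sketch below assumes, for simplicity, that each step of a call succeeds or fails independently; real failures are often correlated, so treat this as intuition rather than a model. The ten-step flow and the per-step accuracies are hypothetical.

```python
import math


def end_to_end_success(step_accuracies: list[float]) -> float:
    """Probability that every step of a call succeeds, assuming independent steps."""
    return math.prod(step_accuracies)


# Hypothetical 10-step call: identify patient, ask questions, interpret answers,
# detect symptoms, classify risk, decide on escalation, document, and so on.
for per_step in (0.95, 0.99):
    overall = end_to_end_success([per_step] * 10)
    print(f"per-step {per_step:.0%} -> end-to-end {overall:.1%}")
# per-step 95% -> end-to-end 59.9%
# per-step 99% -> end-to-end 90.4%
```

Under these assumptions, a step that is right 95% of the time produces a fully correct ten-step call only about 60% of the time.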

This is the reality of building Agentic AI for clinical workflows: the workflows are complex, conversations are nuanced, and the underlying AI stack evolves every quarter. And this is before counting integrations, privacy, security, governance, and operational training.

Why External Consultants Can Increase Complexity

Given the complexity of Agentic AI in healthcare, many organizations involve external consultants.

Expectations vary: some consultants are hired to build a first version of the system, while in other cases, they support process definition, workflow redesign, change management or governance. These represent very different types of engagements.

Consultants who build software bring frameworks and accelerators and often move quickly in the early phase. However, they do not own your clinical workflows, governance requirements, data protection constraints or long-term maintenance burden. They are not embedded within the organization when the underlying AI stack evolves months later.

The outcome is predictable: a system that performs well in a demo and acceptably in a pilot. Once the project ends, ownership shifts back to the internal team.

Internal capacity is limited, knowledge is fragmented, change requests become expensive, and within a year, the system drifts away from the state of the art.

Consultants focused on process redesign and governance can be extremely valuable. They help reshape workflows, prepare call centre teams and align stakeholders. But they cannot replace the continuous evolution and safety work required to operate an Agentic AI system in production in clinical settings.

This is not a criticism of consultants; their incentives and scope are simply different. The key insight is that relying on external build partners without a long-term ownership model often creates more challenges than it solves. It also reinforces the central point: the hardest part of Agentic AI is not shipping the first version, but keeping it safe, aligned and modern while the underlying technology continues to evolve.

The real total cost of ownership

When most healthcare organisations discuss AI costs, the focus usually starts with development. But development is the smallest part of the investment. By the time a team reaches a pilot, several months of work have already been invested across engineering, clinical leadership, quality, privacy, security, integrations and operations, often representing several hundred thousand dollars. Involving external consultants can increase this figure significantly. Yet this is still only the cost of turning a promising experiment into something safe to pilot, not the cost that ultimately matters. The true cost emerges after the pilot, when the system must remain reliable while the AI stack evolves underneath it.

A clinical voice agent is a stochastic, multi-stage system built on speech recognition, voice synthesis, retrieval layers, guardrails and LLMs. None of these components evolves yearly; they evolve monthly. This rapid evolution is the most underestimated dimension of Agentic AI and the core reason maintenance dominates total cost of ownership.

The past two years illustrate this clearly. Whisper transformed multilingual transcription in late 2022. Months later, new telephony-optimised models reduced latency dramatically, large-context architectures improved continuity, and medical speech engines improved terminology recognition. A model considered state-of-the-art in early 2023 was outdated by the end of the year.

Language models have evolved even faster. Since the first GPT release, OpenAI alone has shipped nearly twenty major versions. In 2025 alone, a dozen appeared, and most earlier versions were discontinued. A system built on what was state-of-the-art in February may need to be rebuilt, revalidated or reapproved by August. No internal roadmap is designed for this.
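
Part of each revalidation can be automated. A minimal sketch, assuming a fixed evaluation set of calls scored per language as an end-to-end task-completion rate: the candidate model is blocked if it regresses in any language by more than a tolerance, even when it improves elsewhere. The `EvalResult` type, the one-point tolerance and the scores are hypothetical, and a gate like this complements clinical sign-off rather than replacing it.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """Hypothetical per-language score on a fixed evaluation set of calls."""
    language: str
    baseline: float   # end-to-end task-completion rate of the approved model
    candidate: float  # same metric for the new model version under review


def find_regressions(results: list[EvalResult], tolerance: float = 0.01) -> list[str]:
    """Languages where the candidate scores worse than baseline by more than `tolerance`."""
    return [r.language for r in results if r.baseline - r.candidate > tolerance]


# Invented numbers: the candidate improves English but regresses Spanish.
results = [
    EvalResult("en", baseline=0.91, candidate=0.94),
    EvalResult("es", baseline=0.89, candidate=0.85),
    EvalResult("pt", baseline=0.87, candidate=0.87),
]

regressed = find_regressions(results)
if regressed:
    print("Block upgrade; regressions in:", ", ".join(regressed))
else:
    print("No regressions beyond tolerance; continue to clinical review")
```

Checking per language, rather than on a pooled average, is what catches the "improves in one language, regresses in another" pattern described earlier.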

Each generation requires retesting, recalibrating prompts and safety layers, updating risk documentation, securing clinical sign-off and realigning operational behaviour. Because the system is non-deterministic, all of this requires evaluating behaviour under uncertainty. Unlike traditional software, where annual maintenance rarely exceeds 20% of build cost, a clinical Agentic AI system routinely requires 30-50%. A workflow that costs the equivalent of $500k to build can require another $250k per year to remain safe and current.
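
The arithmetic is simple, but it shows how quickly maintenance overtakes the initial build. The sketch below uses the $500k build cost from the example and compares the 20% annual rate typical of traditional software with the 30% and 50% ends of the clinical range. The five-year horizon is an assumption, and the model deliberately ignores inflation, per-call COGS and additional workflows.

```python
def total_cost_of_ownership(build_cost: float, maintenance_rate: float, years: int) -> float:
    """Build once, then pay maintenance_rate * build_cost every year."""
    return build_cost + build_cost * maintenance_rate * years


BUILD = 500_000  # build cost from the example above
for rate in (0.20, 0.30, 0.50):
    tco = total_cost_of_ownership(BUILD, rate, years=5)
    print(f"{rate:.0%} maintenance, 5 years: ${tco:,.0f}")
# 20% maintenance, 5 years: $1,000,000
# 30% maintenance, 5 years: $1,250,000
# 50% maintenance, 5 years: $1,750,000
```

At 50% a year, maintenance reaches the original build cost in year two and is 2.5 times the build cost after five years.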

Operational cost of goods sold (COGS) adds another layer. Speech recognition, TTS, LLM inference, retrieval and safety logic each add several cents per call. At scale, these costs matter and must be included in any honest comparison with manual workflows.

For example, a health system handling 200,000 follow-up calls each year spends hundreds of thousands of dollars on labour alone, without counting the opportunity cost of scarce clinical staff performing repetitive phone work. A well-maintained autonomous voice agent can relieve much of this burden. But that benefit appears only if the system maintains high resolution rates over time. And maintaining those rates requires keeping pace with a technology landscape that shifts several times during the lifecycle of a single internally built workflow.
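
The dependence on resolution rate can be sketched with a back-of-the-envelope model. It assumes that calls the agent resolves end to end avoid the full staff cost, that unresolved calls escalate to staff and save nothing, and that AI COGS are paid on every call. The 200,000 calls come from the example above and the $250k yearly maintenance from the earlier cost example; the $4.00 labour cost and $0.30 AI cost per call are hypothetical placeholders to replace with local figures.

```python
def annual_net_benefit(calls: int, resolution_rate: float, labour_cost_per_call: float,
                       ai_cost_per_call: float, annual_maintenance: float) -> float:
    """Labour avoided on resolved calls, minus AI COGS on all calls and yearly maintenance."""
    labour_avoided = calls * resolution_rate * labour_cost_per_call
    ai_cogs = calls * ai_cost_per_call  # every call pays for ASR, TTS, LLM, retrieval, safety
    return labour_avoided - ai_cogs - annual_maintenance


CALLS = 200_000          # follow-up calls per year (example above)
LABOUR_PER_CALL = 4.00   # hypothetical fully loaded staff cost per call
AI_PER_CALL = 0.30       # hypothetical sum of a few cents per pipeline component
MAINTENANCE = 250_000    # yearly maintenance from the earlier cost example

for rate in (0.9, 0.7, 0.5):
    benefit = annual_net_benefit(CALLS, rate, LABOUR_PER_CALL, AI_PER_CALL, MAINTENANCE)
    print(f"resolution {rate:.0%}: net ${benefit:,.0f} per year")
# resolution 90%: net $410,000 per year
# resolution 70%: net $250,000 per year
# resolution 50%: net $90,000 per year
```

Under these placeholder numbers, letting the resolution rate drift from 90% to 50% removes most of the annual benefit, even though the agent is still handling every call.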

For many organisations, the challenge is not technical capability but operating at the necessary rhythm. Agentic AI requires a maintenance cadence measured in weeks, not years. Large health systems can sometimes absorb this cadence, but many hospitals operate with small IT teams that are already stretched across multiple priorities.

Healthcare is built for safety, continuity and clinical excellence, not for constantly tracking every incremental release of the world’s fastest-moving technology ecosystem.

This is why maintenance, not development, ultimately determines the total cost of ownership, and why the build versus buy decision should be evaluated on what comes after the prototype, not the prototype itself.

Regulation, governance, and the advantage of breadth

Once organisations understand the true maintenance burden of Agentic AI, regulation and governance emerge as a critical factor. In healthcare, a voice agent that influences clinical decisions, triage or follow-up is not just software. In Europe, it is regulated as a medical device under the MDR (Medical Device Regulation). In the United States, health systems are increasingly applying internal AI governance frameworks to both external vendors and internally built models. These compliance requirements often shift the build versus buy conversation in ways that are not visible at the start of a project.

Under the MDR, clinical systems require ISO 13485 quality management, clinical evaluation, post-market surveillance, traceability, risk management and annual notified-body audits. In the US, governance frameworks introduce AI risk classification, documentation standards, monitoring, transparency, incident response, HIPAA alignment, security controls and emerging federal or state AI policies.

Maintaining these expectations year after year is a significant organisational commitment. At Tucuvi, a dedicated team works exclusively on quality, regulation, safety and AI governance. This is not ancillary work. It is central to operating a clinical voice platform used in real care pathways. When a health system decides to build internally, these obligations do not disappear; they become internal responsibilities, requiring dedicated teams, processes and recurring investment.

This is where breadth changes the economics. A certified platform like Tucuvi is audited as a whole, not workflow by workflow. Every improvement in safety, risk mitigation, model evaluation and regulatory review benefits all pathways. The same architecture supports post-discharge follow-up, care management, screening, scheduling, medication workflows and more. The same integration layer, orchestration engine, safety systems, clinical guardrails and monitoring frameworks are reused across dozens of workflows. Once the first workflow is live, new ones can go from scoping to production in weeks.

This transforms the build versus buy equation. A health system might decide that building a single workflow is feasible if viewed in isolation. But maintaining dozens at a clinically audited level, while technology shifts multiple times per year, is a different challenge. Platforms exist precisely because no internal team can sustainably rebuild infrastructure, certification processes, orchestration engines, safety layers, monitoring systems and clinical validation frameworks for every new workflow.

When regulation, maintenance and breadth are considered together, the decision becomes clearer: building may feel empowering at first, but scaling safely across a health system requires infrastructure designed for continuous innovation and clinical rigor.

A platform does this. A single internal build rarely can.

Build vs Buy? Choosing wisely in Agentic AI

Health systems should absolutely build. They should prototype, experiment and get close to the technology. There is immense value in understanding how Agentic AI behaves, where it excels, where it breaks and what it takes to operate safely. Teams that have built small internal projects make better decisions when evaluating partners because they understand both the promises and the constraints. They understand what can be achieved in a few days versus what takes months, and which expectations are realistic.

Investing in internal AI governance is equally important. The ability to evaluate risks, oversee monitoring and understand internal decision-making benefits every AI initiative. AI governance has its own build versus buy debate, but that deserves a separate article.

Internal building makes the most sense where the problem is well-bounded and failure is inexpensive. Backend processes such as data reconciliation, summarisation, reporting and internal automation offer exactly this kind of environment, with less room for the AI to hallucinate. Building becomes challenging in high-dimensional, patient-facing workflows such as clinical phone calls. These workflows rely on a delicate chain of technologies that must work together in real time: speech recognition, large language models, orchestration, safety layers, escalation logic, monitoring, auditability and clinical oversight. Each component evolves rapidly, introduces complexity and requires recurring testing under uncertainty. Maintenance grows with every new workflow added.

For this reason, a selective approach often serves health systems best. Build where the organisation’s knowledge creates a natural advantage and where experimentation is safe. Buy where safety, regulation and rapid innovation make external platforms far more sustainable.

Some organisations will choose to build their own platforms because it aligns with their long-term strategy and they are prepared to commit the engineering, clinical and regulatory resources needed to sustain them. For most, the real question is not whether they can build one workflow, but whether they want to maintain the entire infrastructure required to support dozens.

Platforms like Tucuvi exist to relieve that burden. They provide the voice engine, the orchestration, the safety layers, the clinical guardrails, the monitoring, the regulatory documentation and the yearly audits required to operate safely at scale. They allow teams to deploy clinically validated voice agents in weeks rather than years, and let internal talent focus on governance, transformation and patient experience instead of reinventing the same foundations repeatedly.

The future of Agentic AI in healthcare is not about choosing between building and buying. It is about choosing wisely what to build and where to rely on a platform.

Build where it sharpens your organisation. Buy where excellence demands relentless reinvention, regulatory maturity and a pace of innovation that is difficult for any single team to sustain.

And in clinical voice AI, the part that benefits from buying grows larger every quarter.